On a related note, all Computer Science undergrads have to take a class called "Discrete Mathematics" in about their sophomore year. For many it is a weed-out class - it almost was for me and I confess to having to take it more than once to get past it. Now, most CS students tend to be heavily oriented to the "applied science" end of the spectrum but Discrete Math is a very abstract hodgepodge of logic, probability, functions and relations, and graph theory. At the time I saw no reason whatsoever to be taking that stuff. 25 years later I can look back and see exactly why I was taking it.

Case in point: while studying genome assembly methods I found out that for data produced by short read sequencers, the kind that are fast and cheap to use and popping up in every lab and hospital, a building a type of graph is one of the most common assembly methods.

Not Your Father's Kind of Graph

I'm not talking about a graph that is an X, Y plot of a parabola or something. In graph theory you have "nodes" and "edges." Still essentially dots and lines, but it's not drawn on an XY axis and many times the graph's lines have a direction, pointing to the next dot (node). In 1735, Leonard Euler (pronounced "Oiler" of all things), worked on this problem called "The Seven Bridges of Koenigsberg" in which the city was essentially an island surrounded by the Pregel River. The city had seven bridges to the mainland and the problem was to start at any bridge and walk a path that went over every bridge exactly once. |

| Modified by Bogdan Giuşcă - for Wikipedia |

Euler said that the path over land to the bridge is irrelevant. This leaves 4 land areas and 7 lines connecting them. That information could be redrawn like this:

|

| Created by [http://en.wikipedia.org/wiki/User:Mark_Foskey Mark_Foskey] |

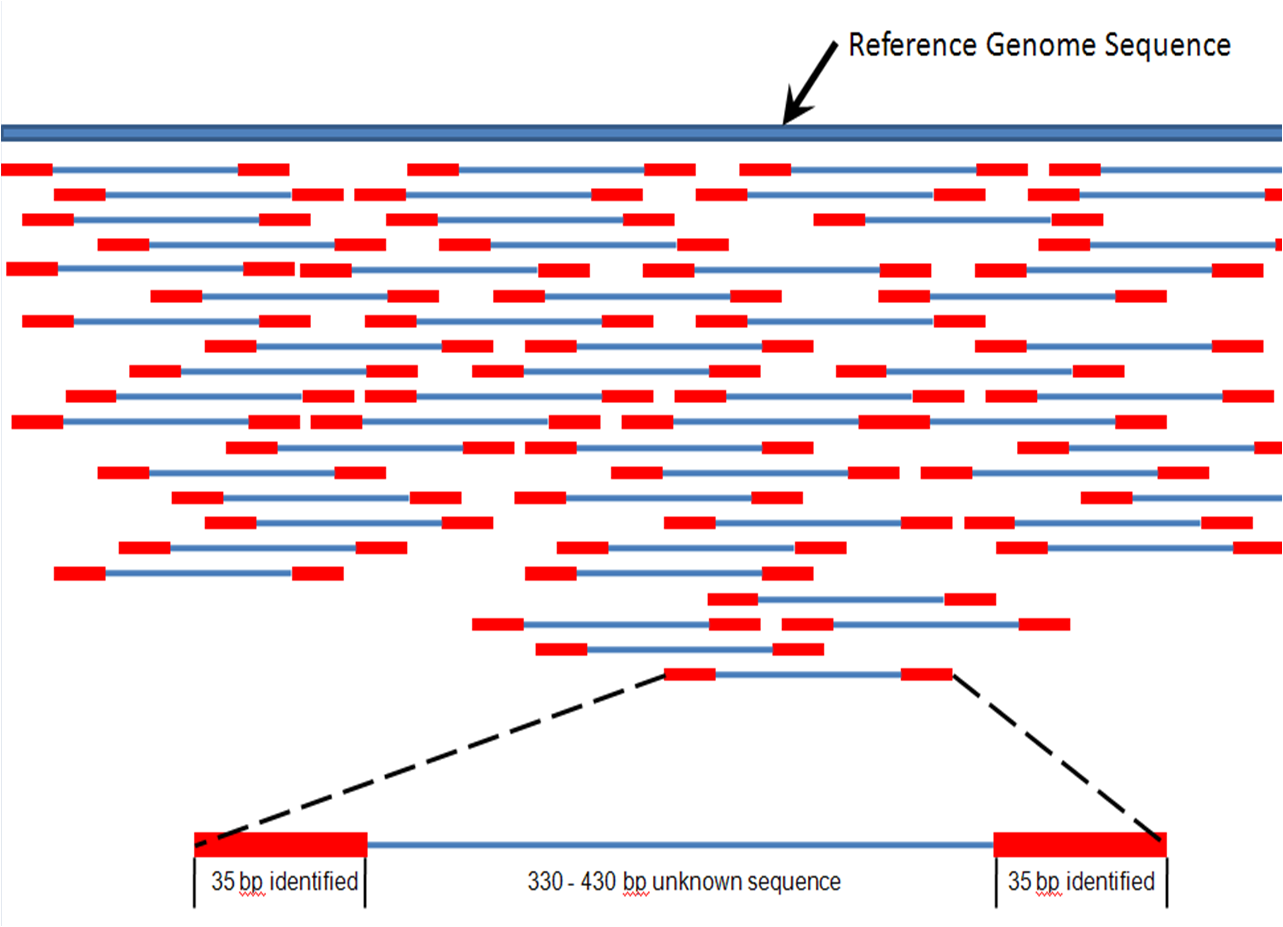

Somewhat counterintuitively, the de Bruijn method divides the short read data into a uniform size and then builds a graph out of the overlaps of some specified size. Maybe an example will help.

Imagine we have a sequence CTGAAGTCCAGTACA and our uniform size (k-mer) is 5 (or 5-mer) then overlaps would look something like this:

CTGAAGTCCAGTACA

CTGAA

.TGAAG

..GAAGT

...AAGTC

....AGTCC

.....GTCCA

......TCCAG

.......CCAGT

........CAGTA

.........AGTAC

..........GTACA

As you can see, each node is shifted one space to the right. Representing it more like a graph:

CTGAA -> TGAAG -> GAAGT -> AAGTC -> AGTCC -> GTCCA -> TCCAG ------> CCAGT -> CAGTA -> AGTAC ->GTACA

Part of the key to all of this is that there are many copies of each read. If we add in the concept of a "weight" for each line, then every time we draw an edge (line) from one node to the next we can increase the weight and when it is all over, the path with the heaviest weight is the final assembly.

In that last paragraph I skipped a couple of ideas for simplicity's sake. One of which is that a full genome has sections that repeat, thus making it much harder to place a k-mer. The second is that the reading process isn't perfect and errors creep in. Thus the reason for many copies and for the weights.

If, for example, the second sequence above, the "TGAAG" had an error on the first base and it read as "GGAAG" we can see that it wouldn't fit in this sequence. Rather, it would make a "loop" with a low weight. Low weight branches are pruned off in the final assembly.

Even though all of these algorithms are based on the same theory, as in so many things, the devil is in the details. Enough so that there is an annual event where researchers pit their against each other in an "Assemblathon."

Assemblathon 2 (2013) had 43 submissions by 21 teams assembling a fish, a bird and a snake. They narrowed down 100 metrics into 10 categories used for evaluation. The conclusion?

Perhaps I'll have an entry in 2015 or 2016 :)."... the high degree of variability between the entries suggests that there is still much room for improvement in the field of genome assembly..."

References:

Bradnam, K. R., Fass, J. N., Alexandrov, A., Baranay, P., Bechner, M., Birol, İ., ... & MacCallum, I. (2013). Assemblathon 2: evaluating de novo methods of genome assembly in three vertebrate species. arXiv preprint arXiv:1301.5406.

Homologus. (2011-13). de Bruijn Graphs for NGS Assembly. In Homolog.us. Retrieved 11/4/2013, from http://www.homolog.us/Tutorials/index.php?p=1.1&s=1.

Wikipedia. (2013). Seven Bridges of Königsberg. In Wikipedia, the free encyclopedia. Retrieved 11/4/2013, from http://en.wikipedia.org/wiki/Seven_Bridges_of_Königsberg.